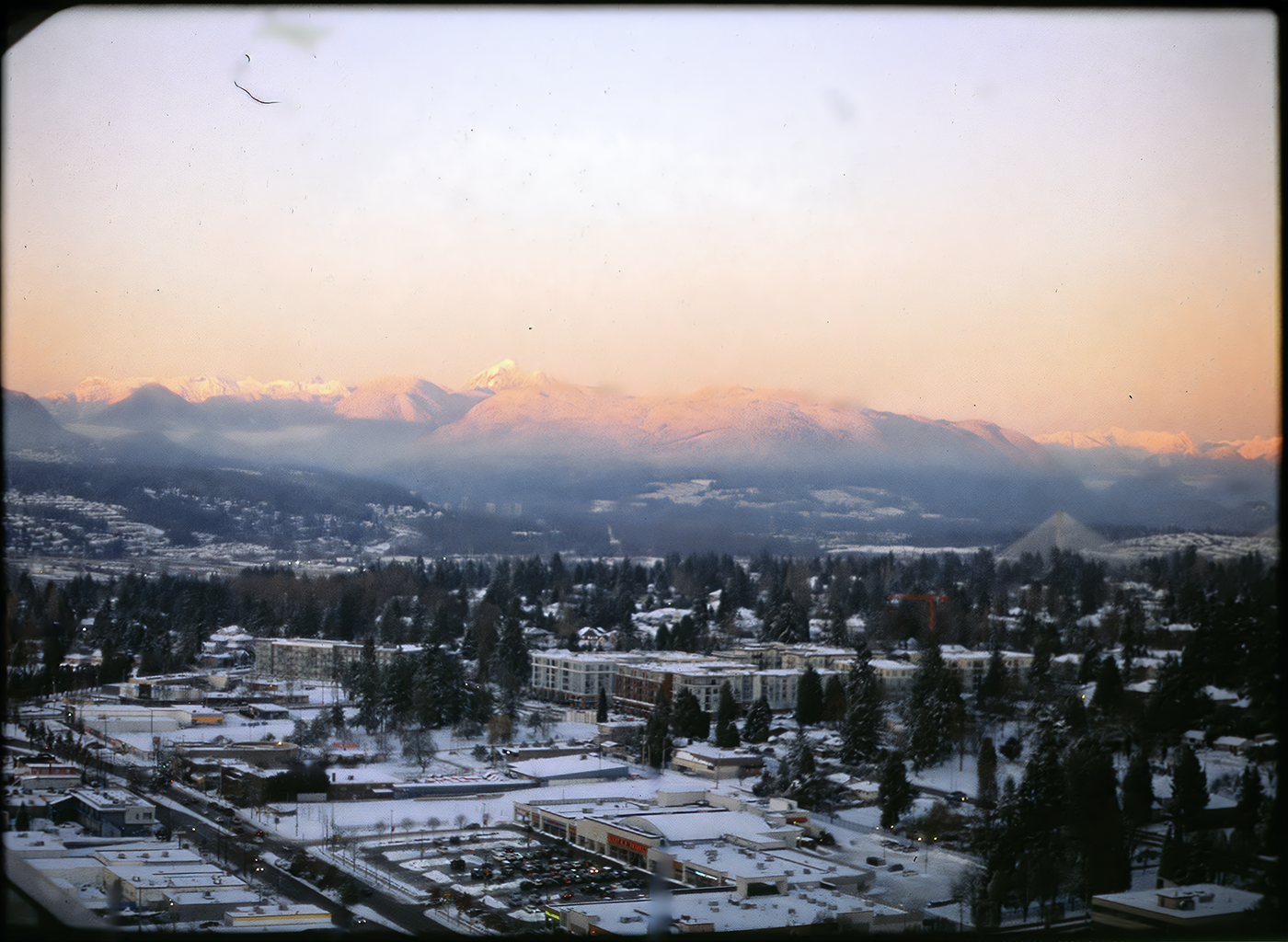

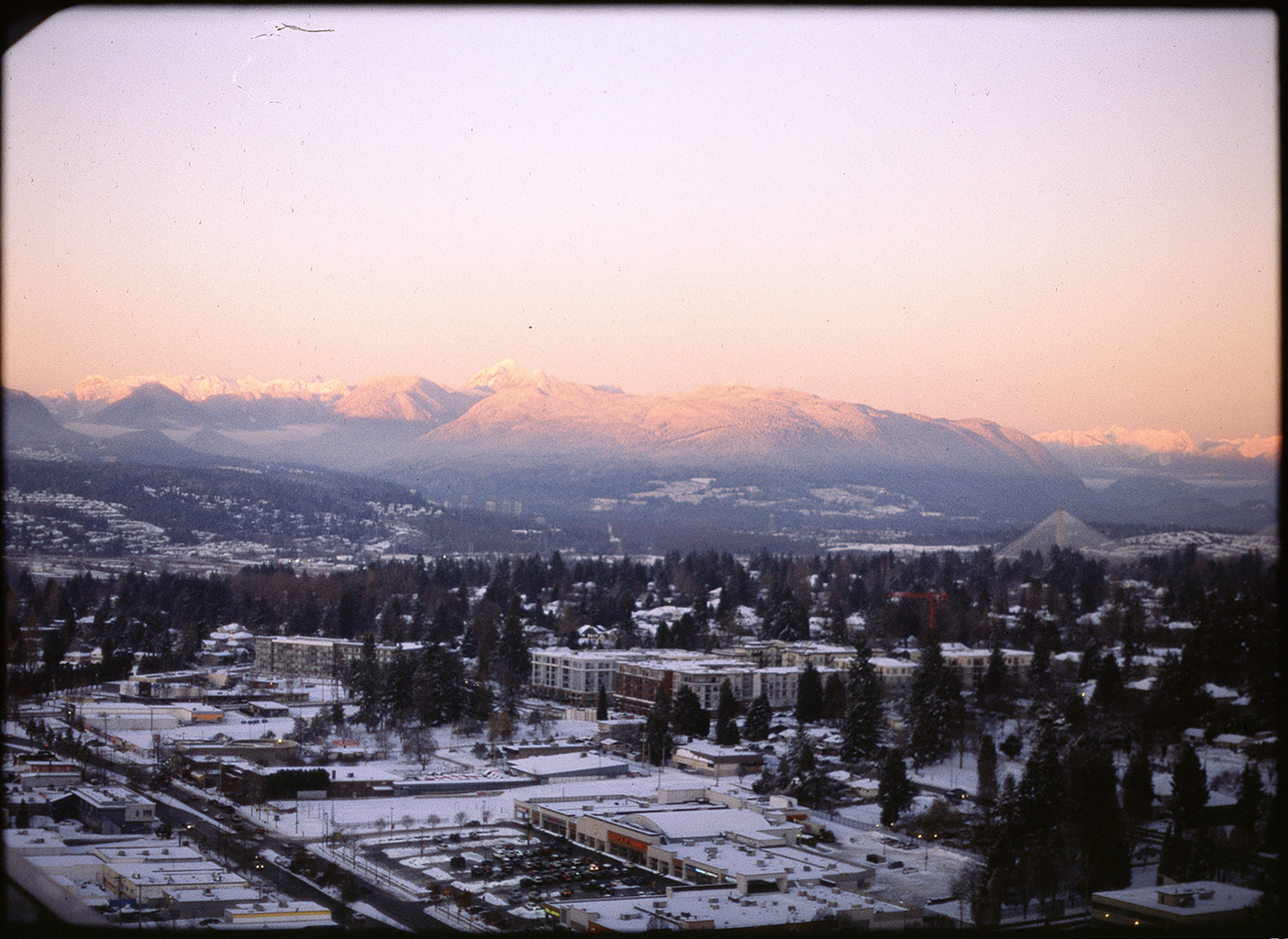

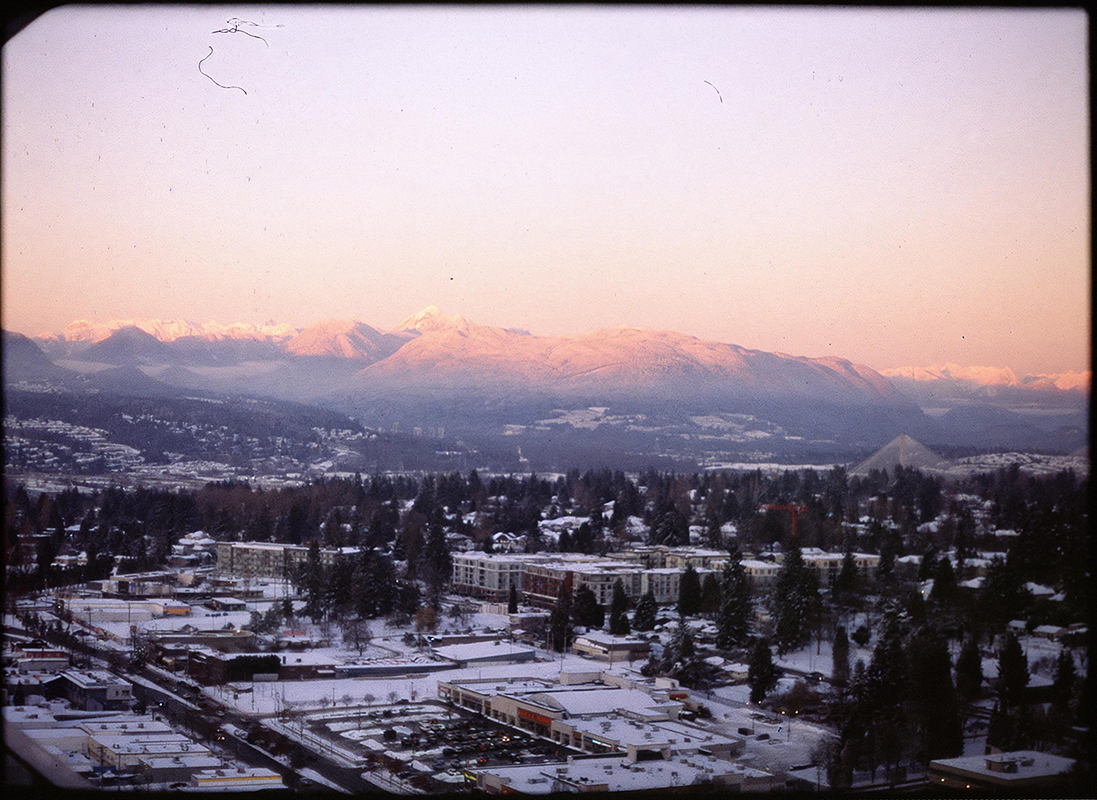

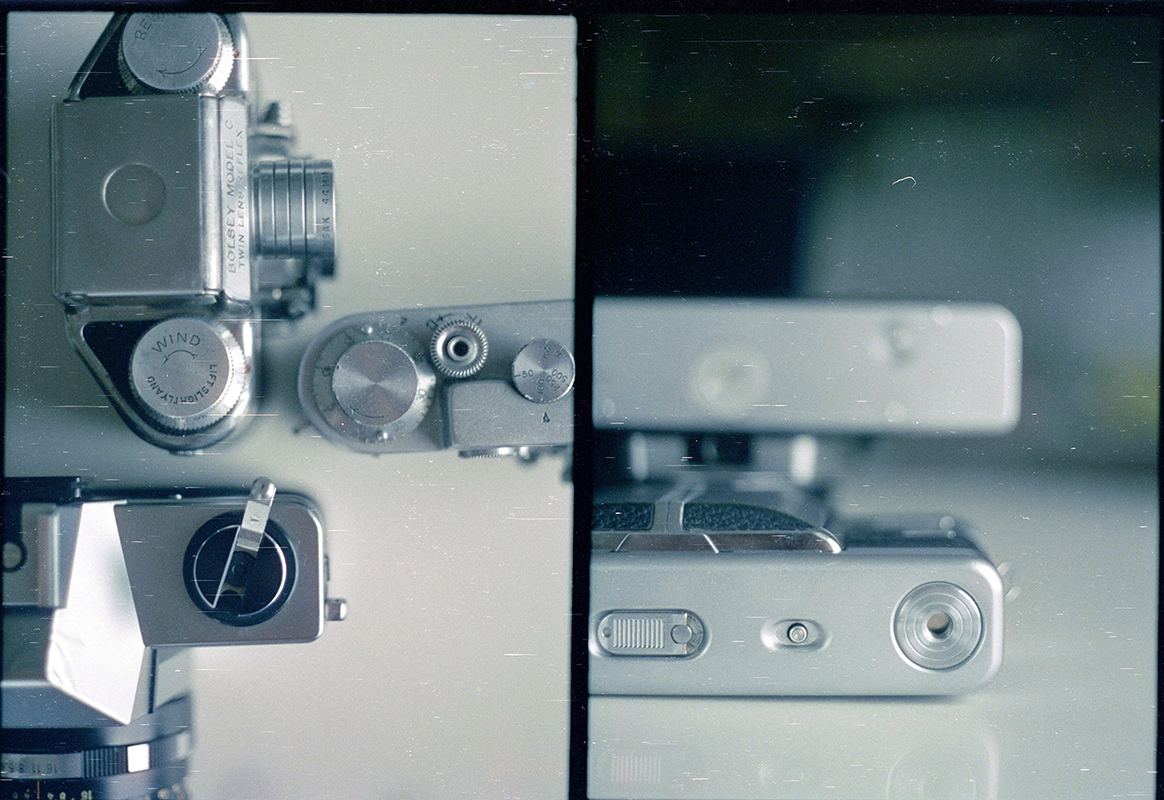

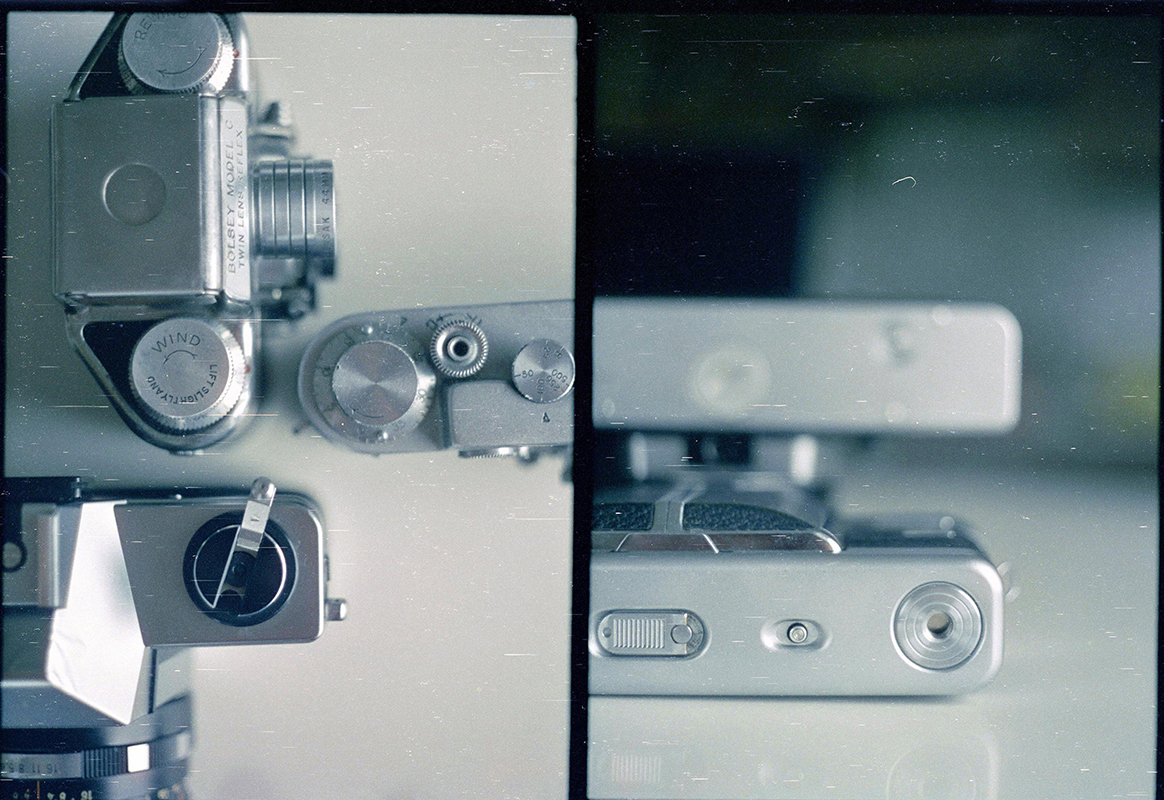

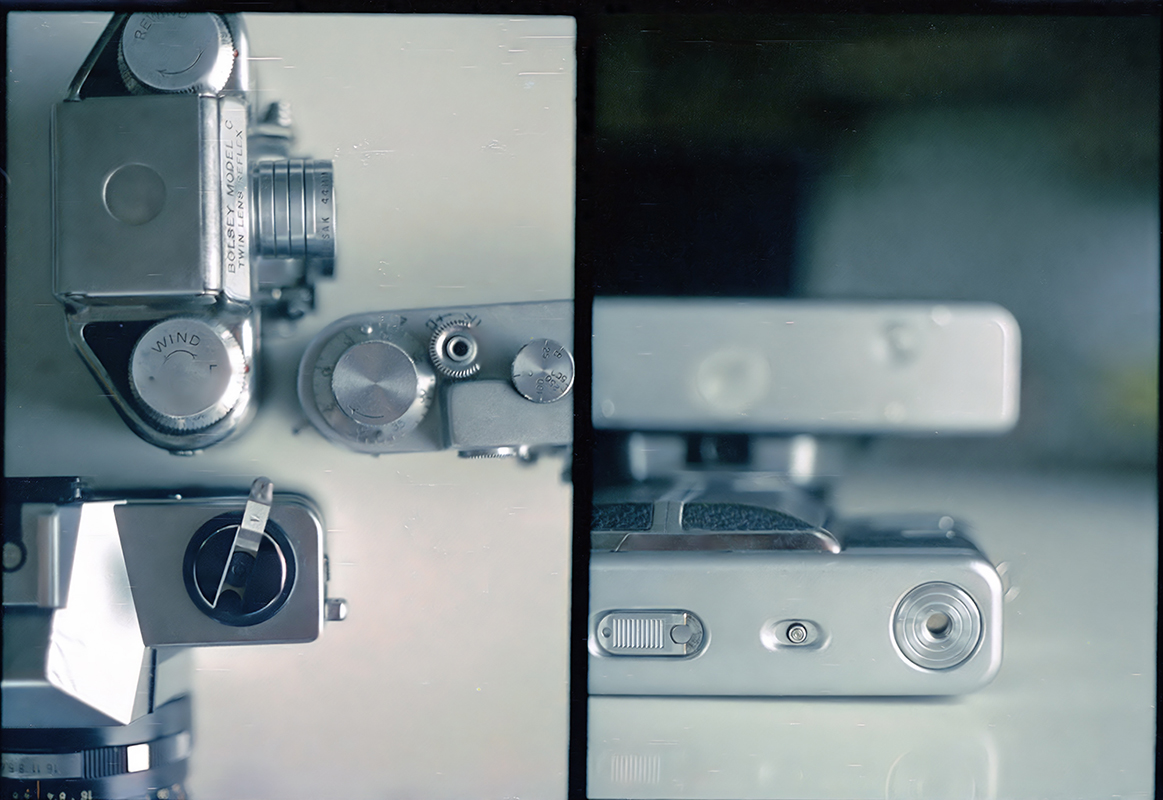

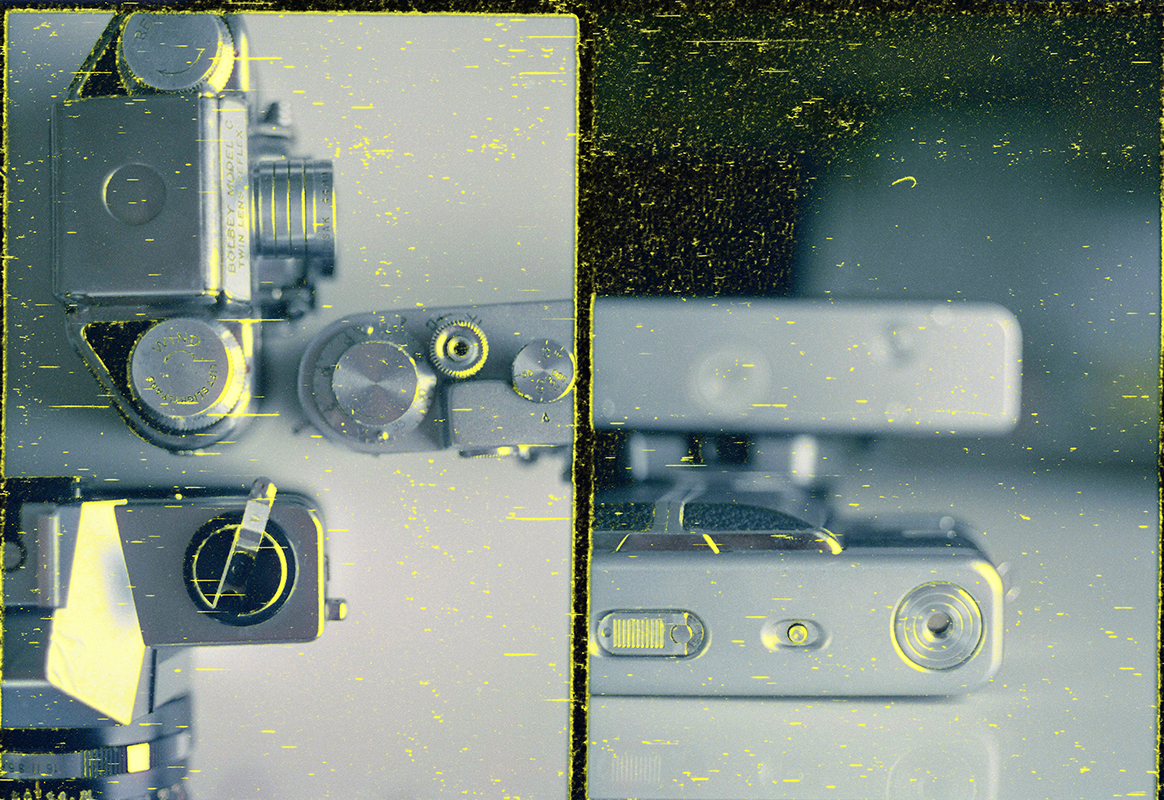

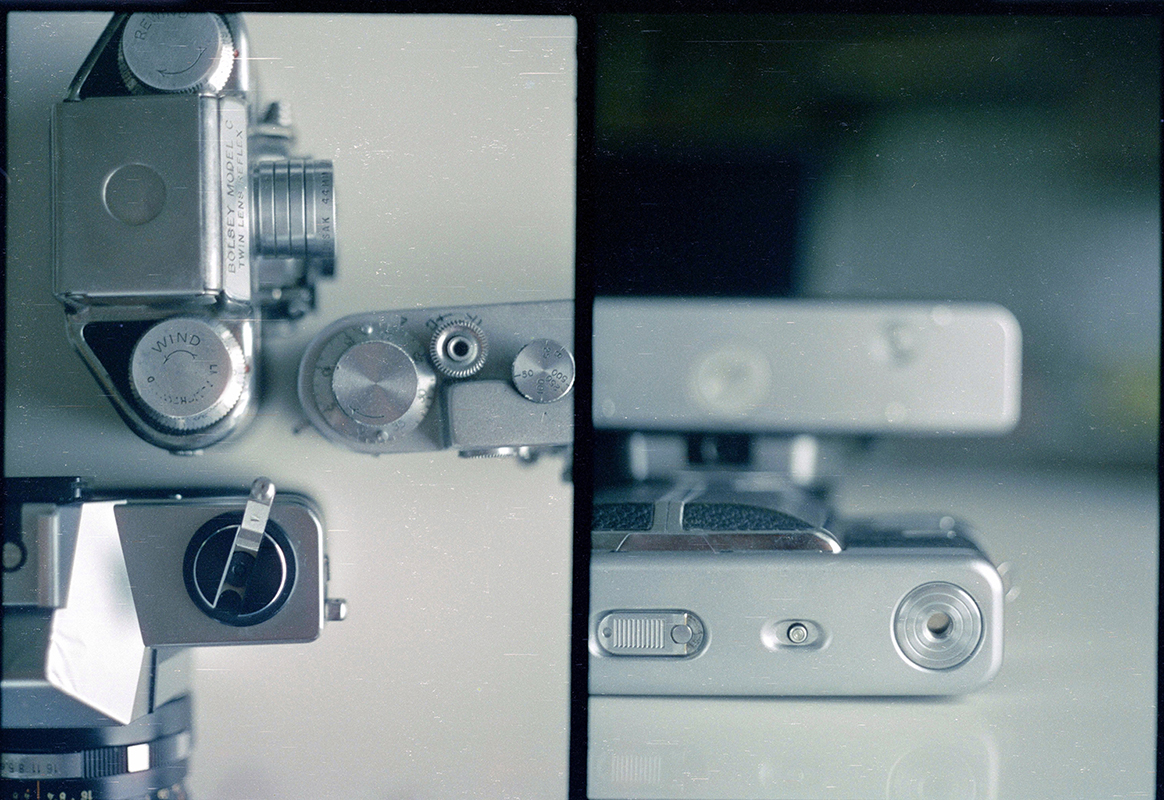

Digital scans of analogue photographic film typically contain artefacts such as dust and scratches. Automated removal of these is an important part of preservation and dissemination of photographs of historical and cultural importance.

While state-of-the-art deep learning models have shown impressive results in general image inpainting and denoising, film artefact removal is an understudied problem. It has particularly challenging requirements, due to the complex nature of analogue damage, the high resolution of film scans, and potential ambiguities in the restoration. There are no publicly available high-quality datasets of real-world analogue film damage for training and evaluation, making quantitative studies impossible.

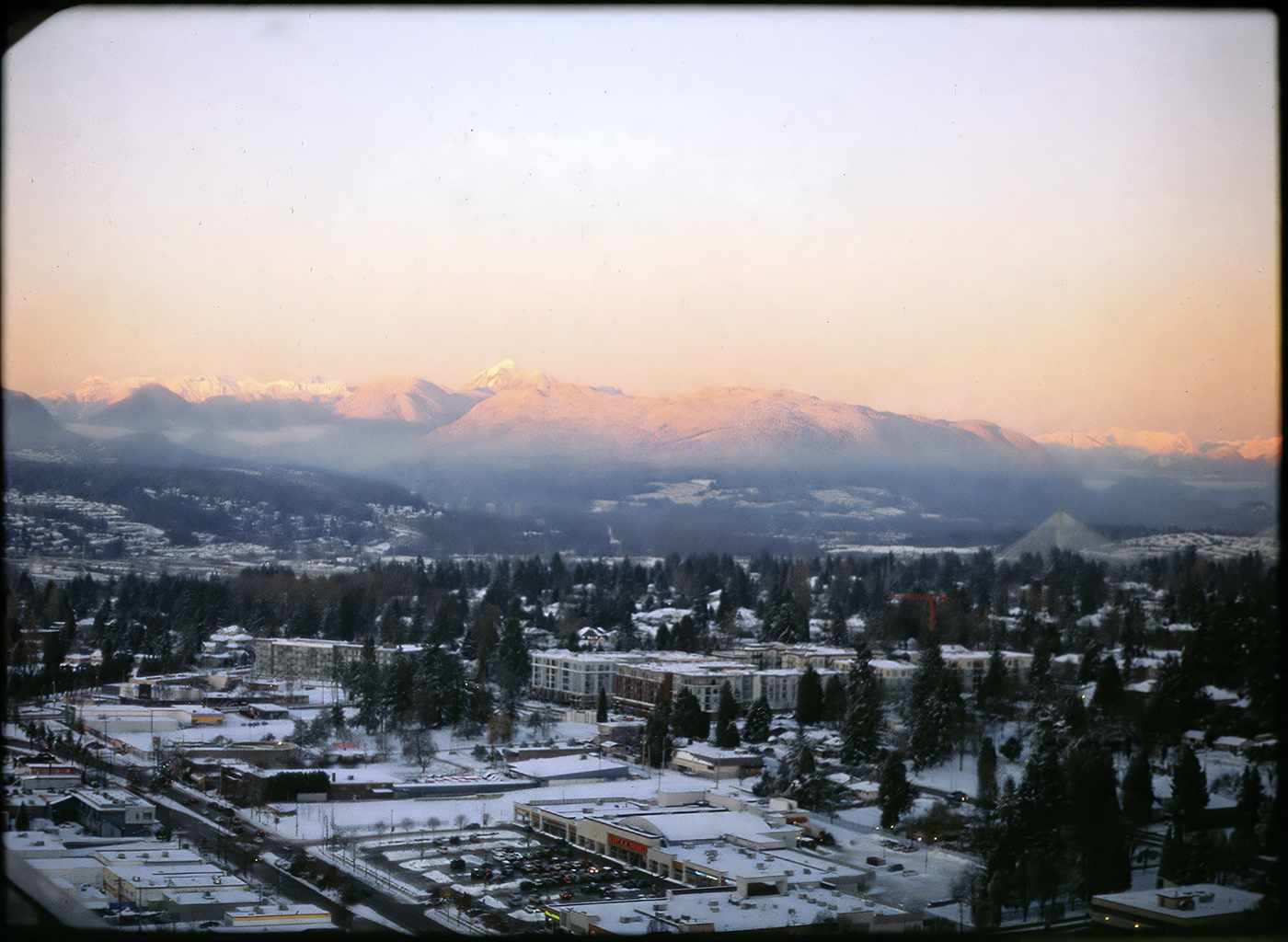

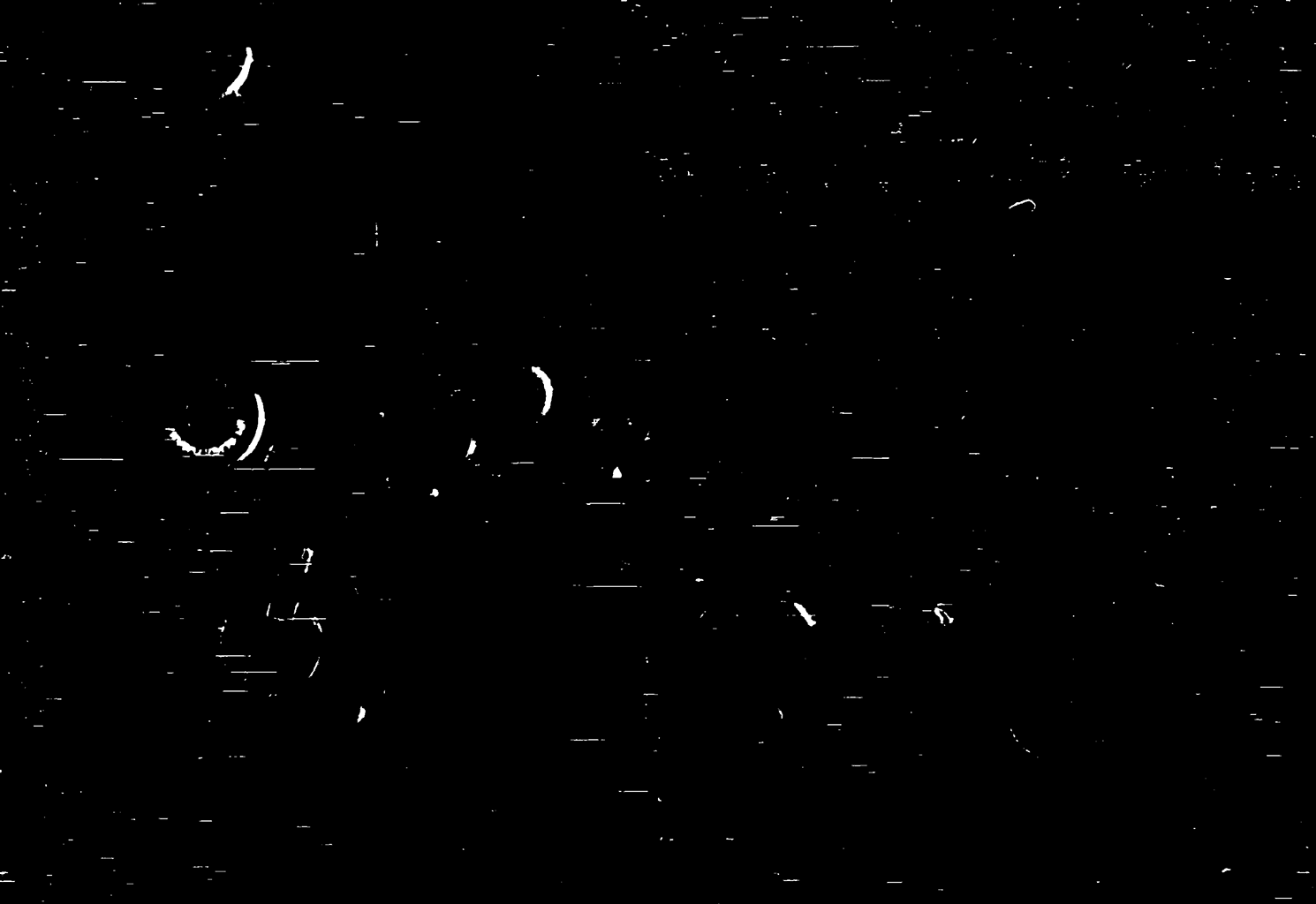

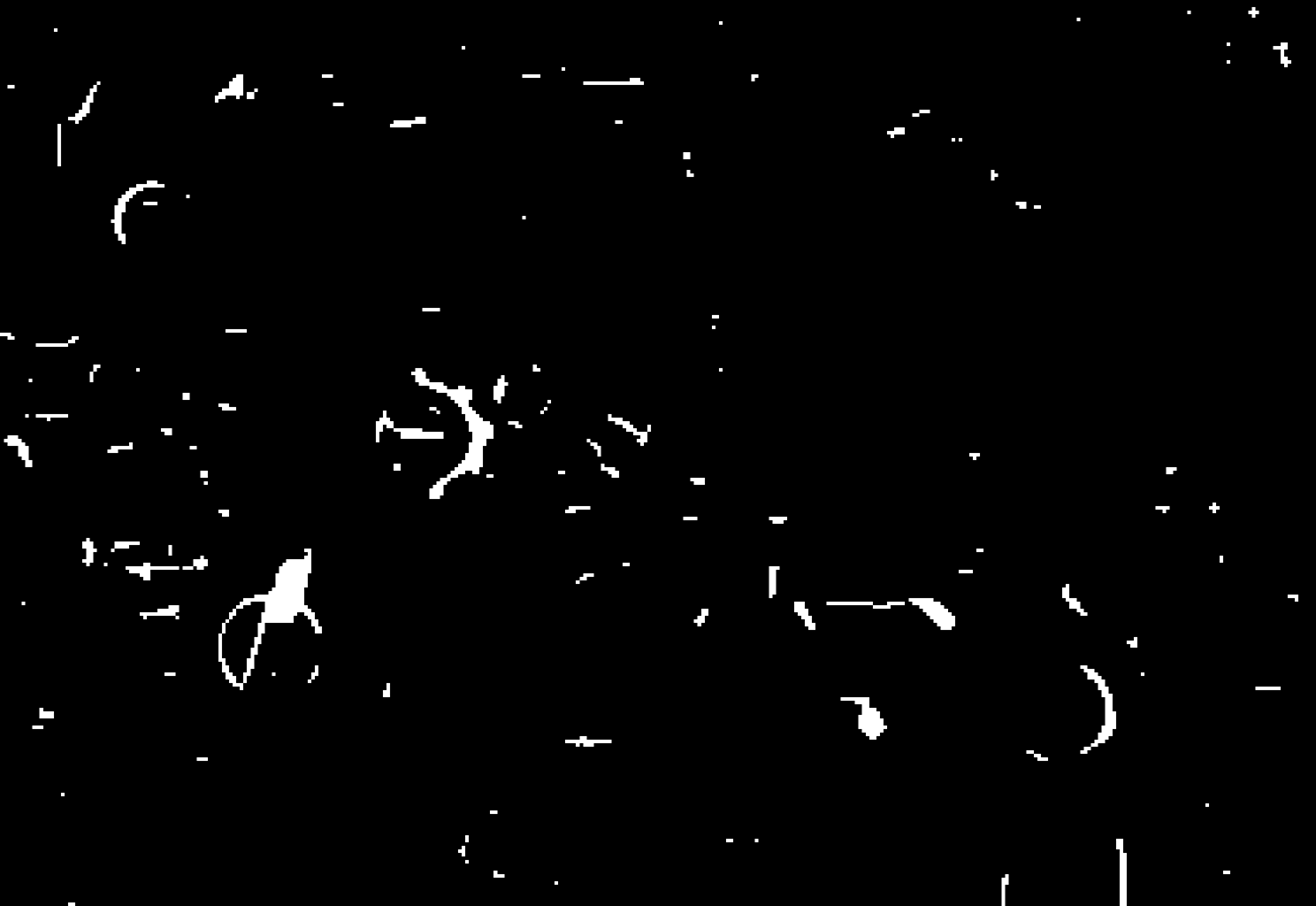

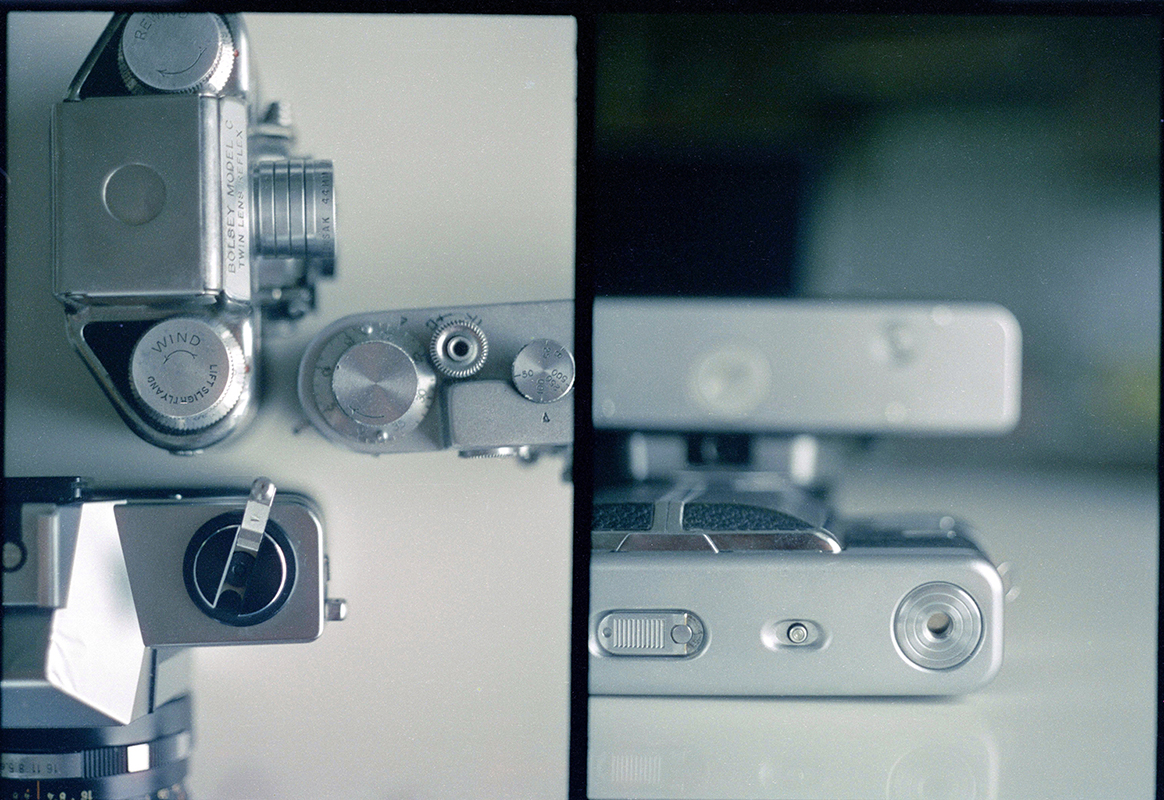

We address the lack of ground-truth data for evaluation by collecting a dataset of 4K damaged analogue film scans paired with manually restored versions produced by a human expert, allowing quantitative evaluation of restoration performance. We also construct a larger synthetic dataset of damaged images with paired clean versions, using a statistical model of artefact shape and occurrence learnt from real, heavily damaged images. We carefully validate the realism of the simulated damage via a human perceptual study, showing that even expert users find our synthetic damage indistinguishable from real damage. In addition, we demonstrate that training with our synthetically damaged dataset leads to improved artefact segmentation performance compared to previously proposed synthetic analogue damage.

Finally, we use these datasets to train and analyse the performance of eight state-of-the-art image restoration methods on high-resolution scans. We compare methods that perform the restoration task directly on scans with artefacts against methods that require a damage mask to be provided for inpainting the artefacts.

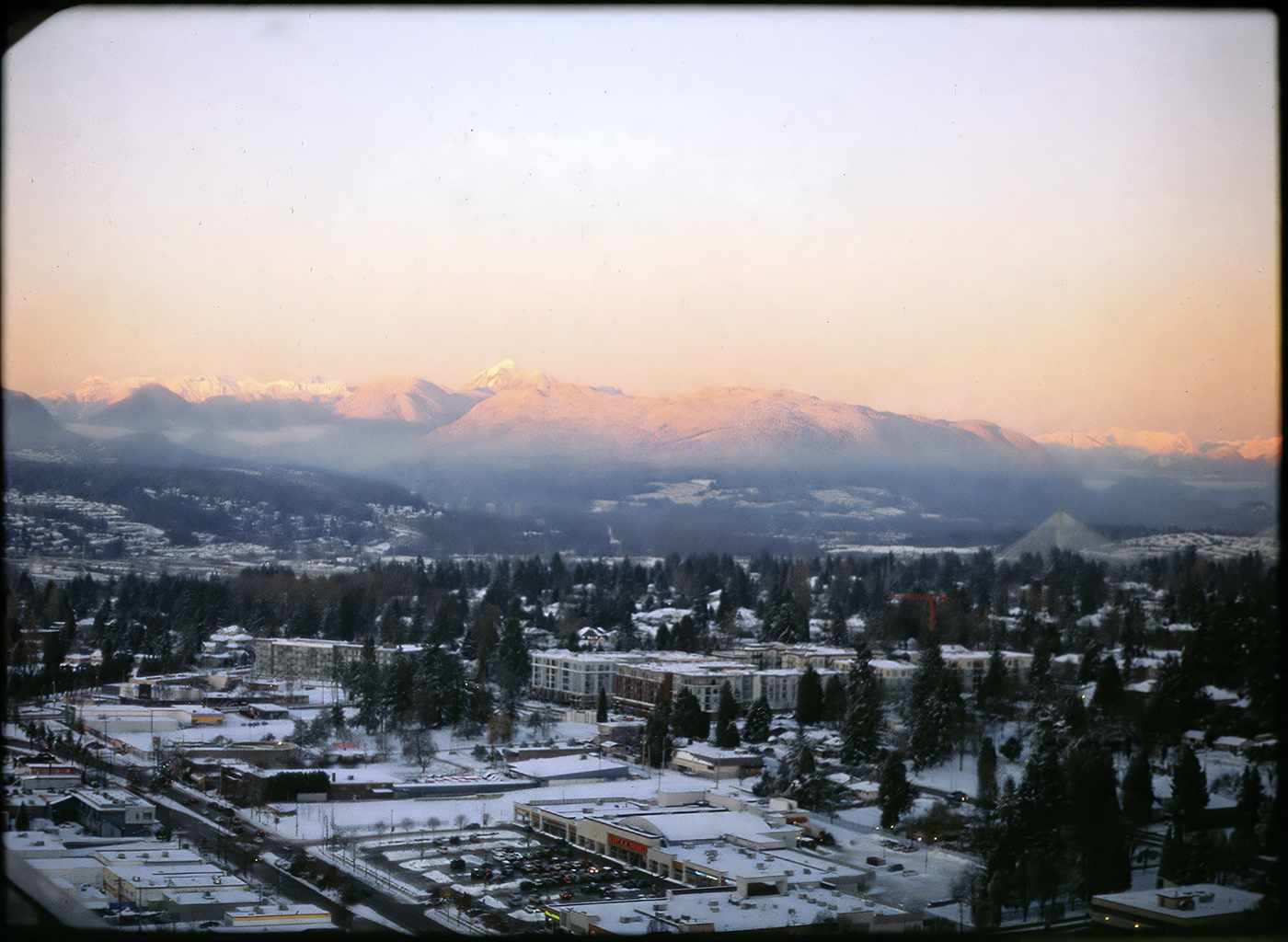

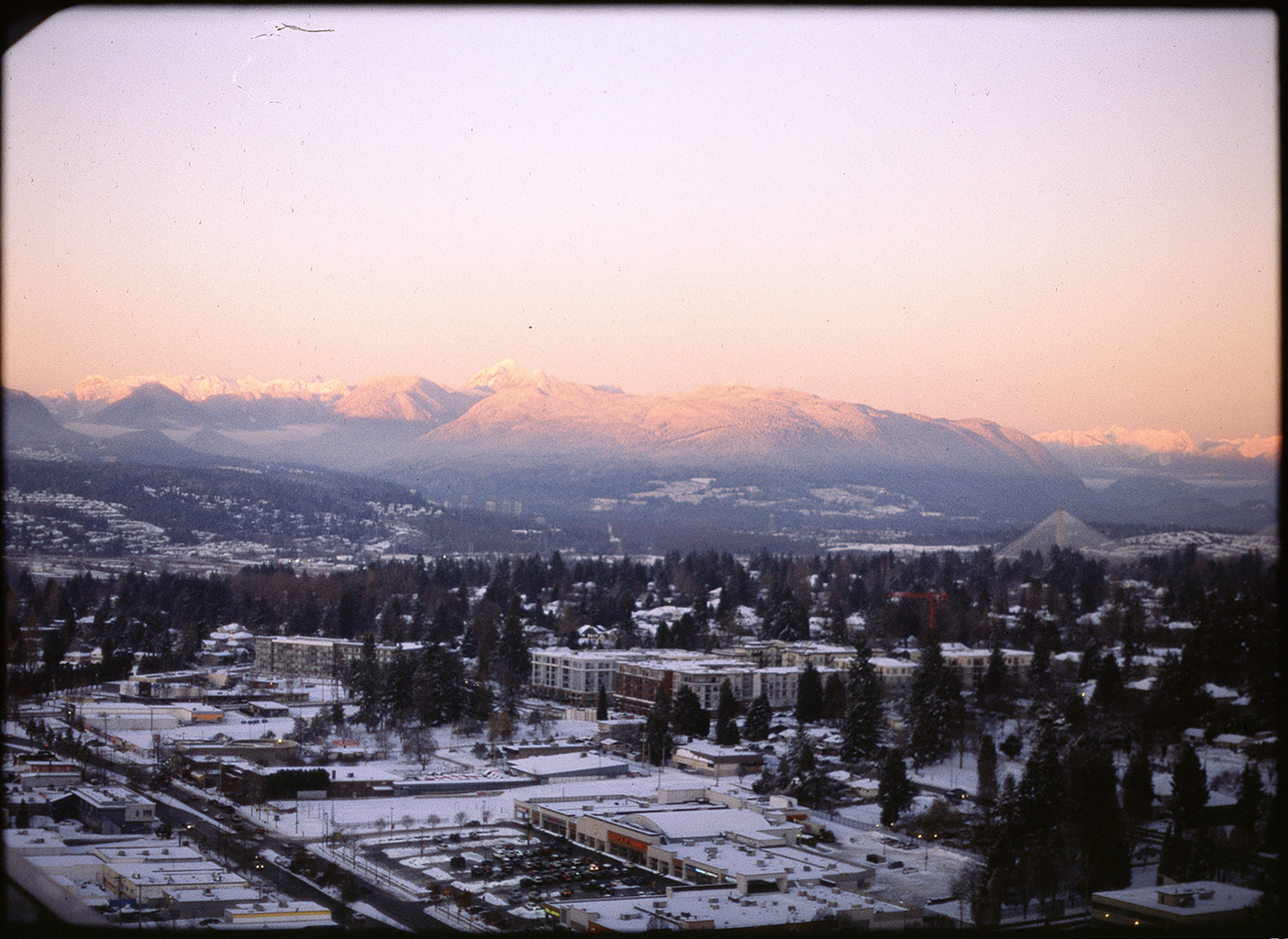

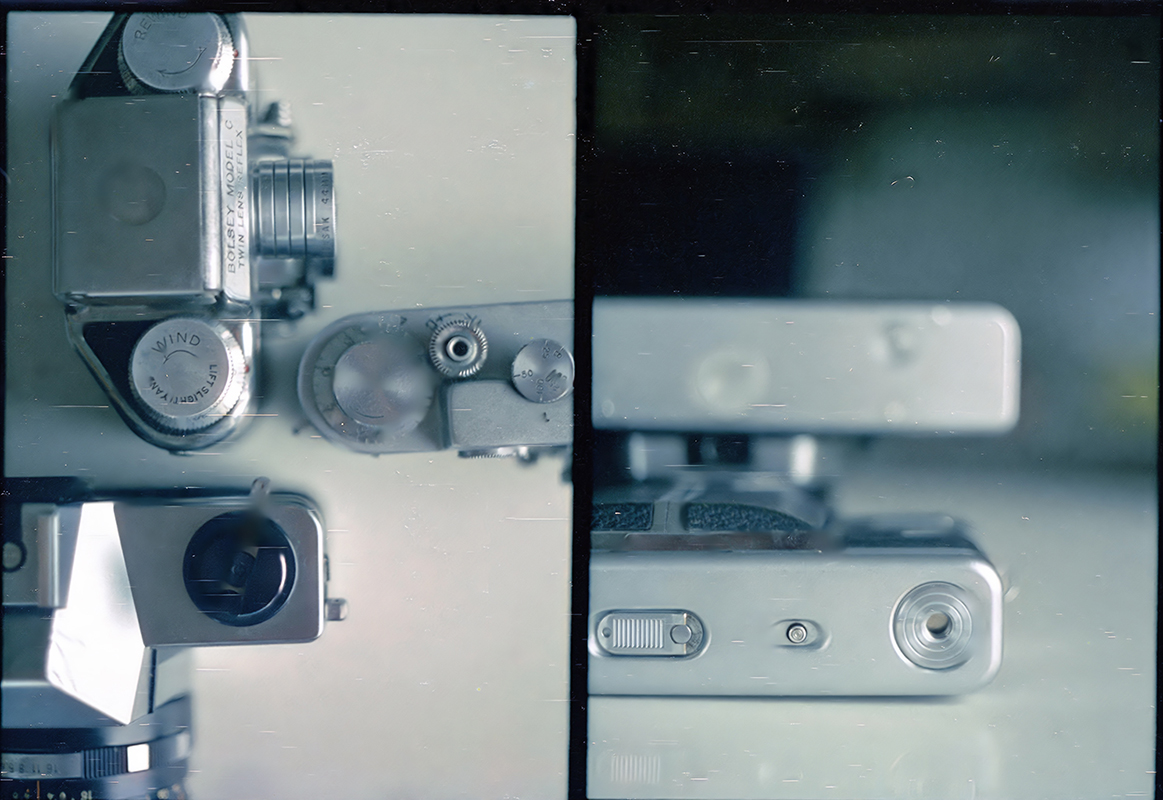

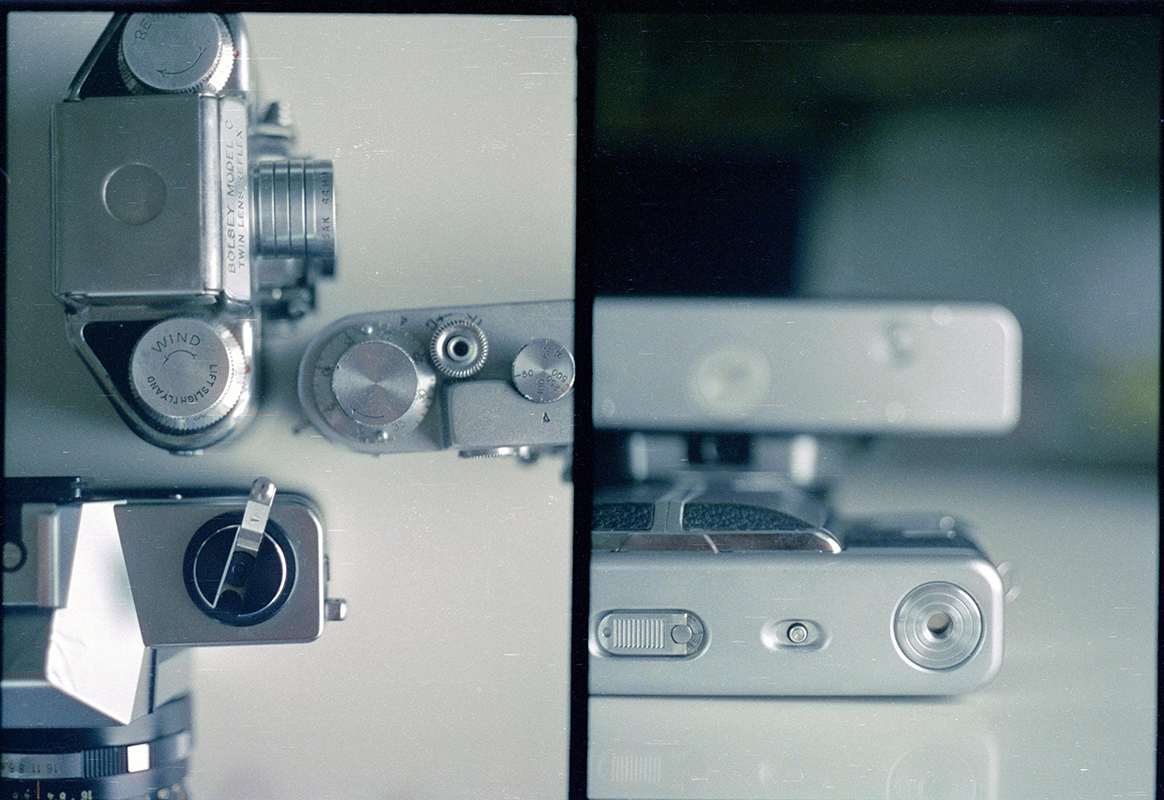

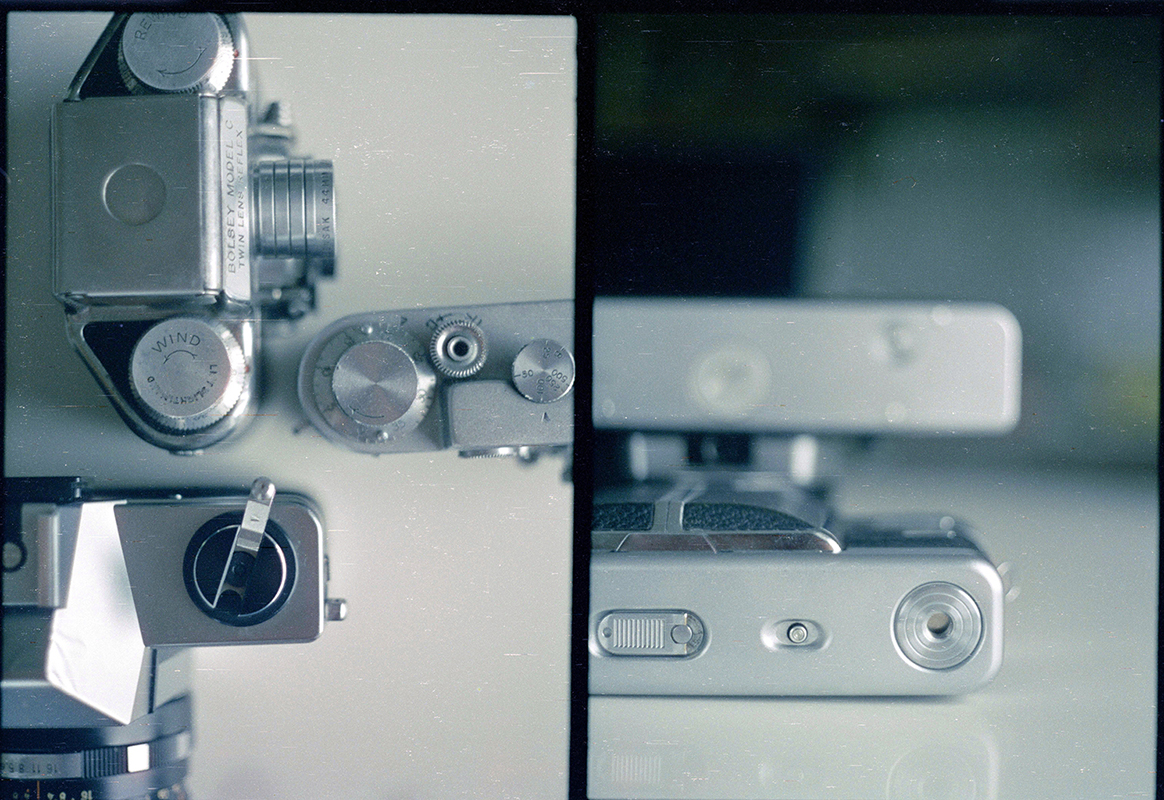

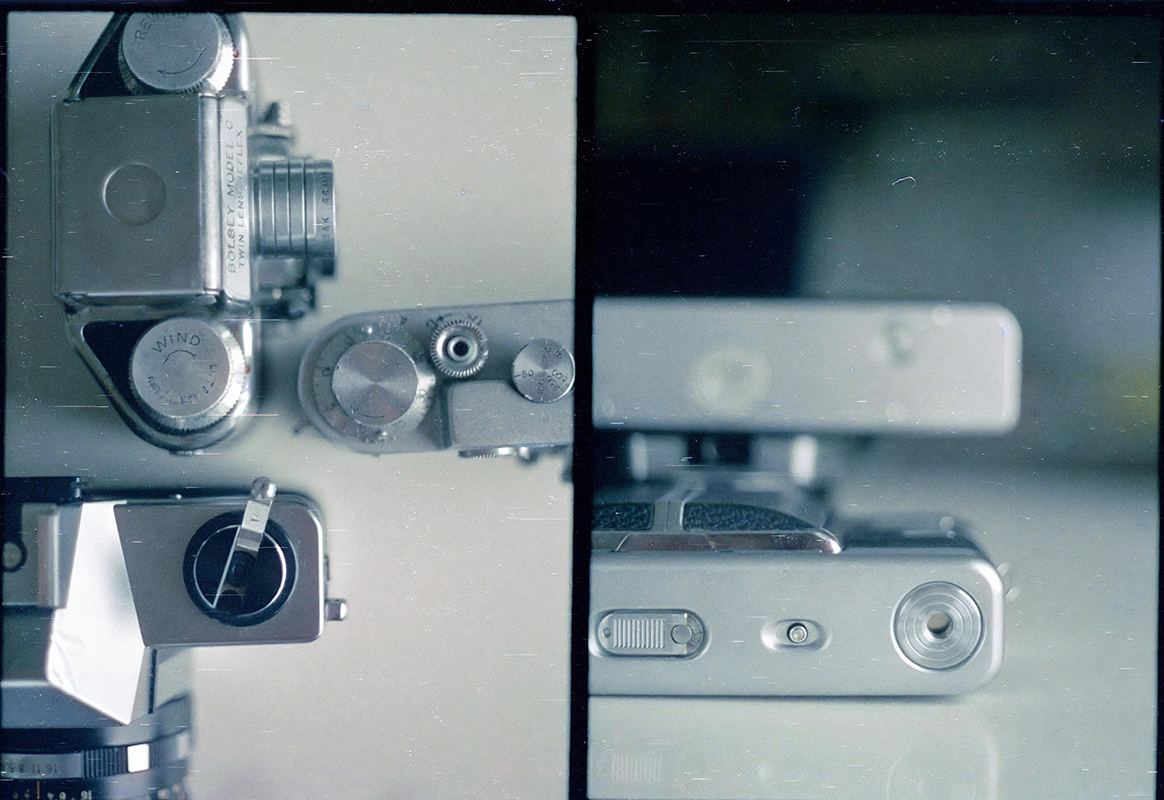

We collected 44 scans of 35 mm film covering a variety of colour emulsions, including slides and negatives. Damage includes dust, scratches, hairs, and specks. Each damaged scan is paired with a complementary restored version, in which the analogue artefacts have been manually removed by an expert. This dataset is used to evaluate existing damage detection and restoration methods.
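As a rough illustration of how such damaged/restored pairs can support quantitative evaluation, the sketch below computes PSNR between a restored output and the expert ground truth, and intersection-over-union (IoU) between a predicted damage mask and a reference mask. The choice of these two metrics, the function names, and the toy arrays are assumptions made for illustration; they are not necessarily the metrics or code used in the paper.

```python
import numpy as np


def psnr(restored, reference, max_value=255.0):
    """Peak signal-to-noise ratio (dB) between a restoration and its ground truth."""
    diff = restored.astype(np.float64) - reference.astype(np.float64)
    mse = np.mean(diff ** 2)
    if mse == 0:
        return float("inf")  # identical images
    return 10.0 * np.log10(max_value ** 2 / mse)


def mask_iou(predicted, reference):
    """Intersection-over-union of two binary damage masks."""
    predicted = predicted.astype(bool)
    reference = reference.astype(bool)
    union = np.logical_or(predicted, reference).sum()
    if union == 0:
        return 1.0  # both masks empty: treat as perfect agreement
    return np.logical_and(predicted, reference).sum() / union


# Toy data standing in for a real scan pair (hypothetical values).
ground_truth = np.full((4, 4), 100, dtype=np.uint8)
model_output = ground_truth.copy()
model_output[0, 0] = 110  # one pixel restored imperfectly

predicted_mask = np.array([1, 1, 0, 0])
reference_mask = np.array([0, 1, 1, 0])

score_psnr = psnr(model_output, ground_truth)
score_iou = mask_iou(predicted_mask, reference_mask)
```

On full 4K scans the same functions apply unchanged, although in practice one may prefer to report metrics only within or around damaged regions, since most pixels in a scan are undamaged.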

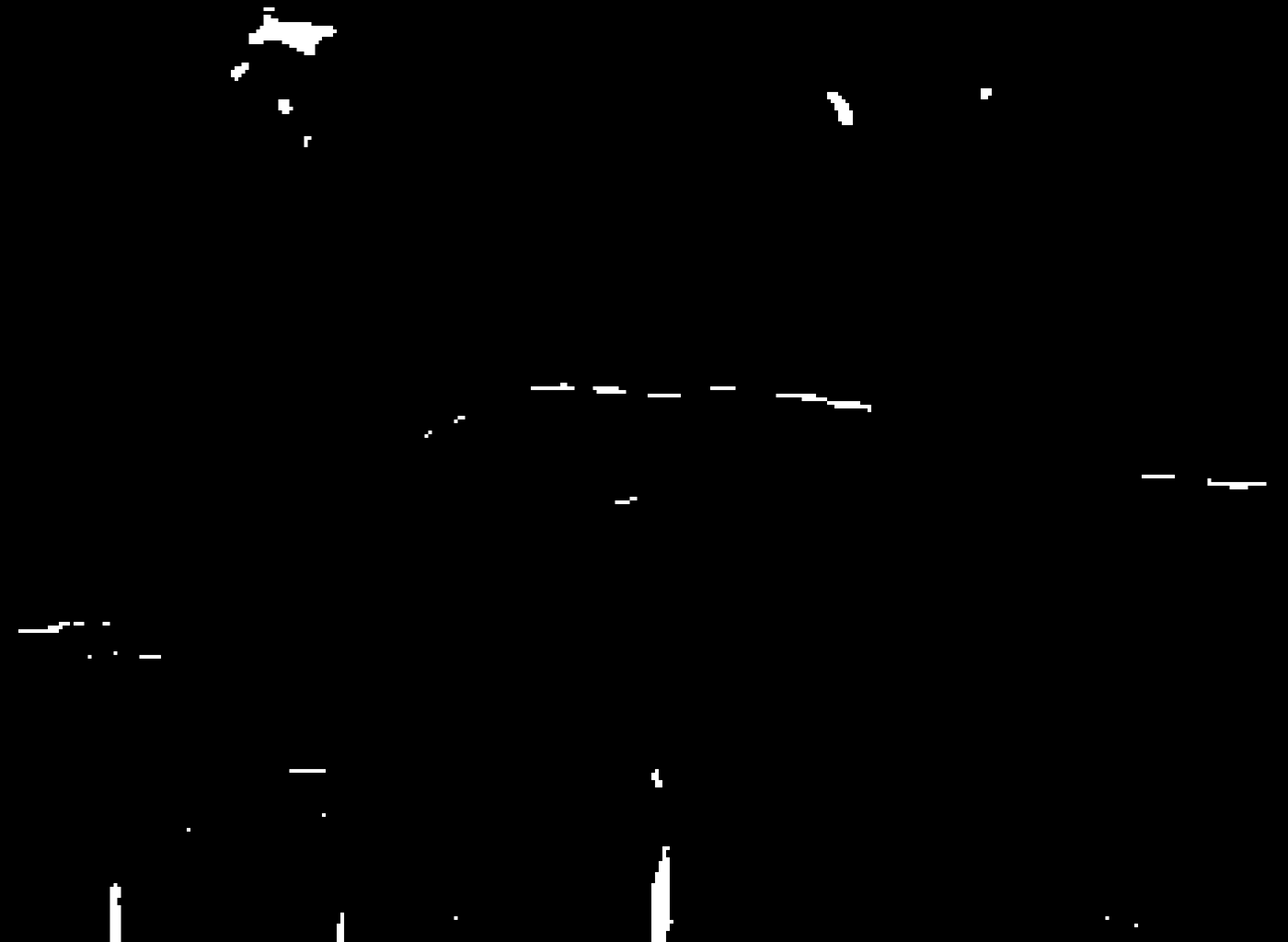

We manually annotate individual artefacts in the scans with bounding polygons, and classify each as dirt, dust, long hair, short hair or scratch. We extract each individual artefact, zero-padded to a square, to create a bank of isolated artefacts to sample from when generating new overlays. In addition, we use these artefacts to create a statistical model of analogue damage in terms of size, shape, and spatial distribution. In total, we have annotated 12135 artefacts across the 10 scanned frames. Annotations for each scan are included in JSON format, and can easily be converted to OpenCV contour format.
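The conversion and extraction steps described above might look roughly like the following sketch. The JSON record layout (`label` and `polygon` keys) and the helper names are hypothetical, since the actual annotation schema is not described here; the only fixed point is that OpenCV represents a contour as an `int32` array of shape `(N, 1, 2)`. The crop is taken from the polygon's bounding box; masking out pixels outside the polygon (for example with `cv2.fillPoly`) is omitted to keep the example dependent on NumPy alone.

```python
import json

import numpy as np


def polygon_to_contour(points):
    """Convert [[x, y], ...] into an OpenCV-style contour: int32, shape (N, 1, 2)."""
    return np.asarray(points, dtype=np.int32).reshape(-1, 1, 2)


def crop_and_pad_square(image, contour):
    """Crop the contour's bounding box from `image` and zero-pad it to a square."""
    xs, ys = contour[:, 0, 0], contour[:, 0, 1]
    x0, x1 = int(xs.min()), int(xs.max()) + 1
    y0, y1 = int(ys.min()), int(ys.max()) + 1
    crop = image[y0:y1, x0:x1]
    h, w = crop.shape[:2]
    side = max(h, w)
    # Centre the crop inside a zero-filled square canvas.
    top, left = (side - h) // 2, (side - w) // 2
    padded = np.zeros((side, side) + crop.shape[2:], dtype=crop.dtype)
    padded[top:top + h, left:left + w] = crop
    return padded


# Hypothetical annotation record; the real JSON schema may differ.
record = json.loads(
    '{"label": "scratch", "polygon": [[2, 1], [7, 1], [7, 3], [2, 3]]}'
)
contour = polygon_to_contour(record["polygon"])

# Toy greyscale "scan" standing in for a real 4K image.
scan = np.arange(100, dtype=np.uint8).reshape(10, 10)
patch = crop_and_pad_square(scan, contour)
```

An array in this `(N, 1, 2)` layout can be passed to OpenCV functions that accept contours, such as `cv2.drawContours` or `cv2.boundingRect`.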

@article{ivanova23analogue,
  title = {Simulating analogue film damage to analyse and improve artefact restoration on high-resolution scans},
  author = {Daniela Ivanova and John Williamson and Paul Henderson},
  year = 2023,
  journal = {Computer Graphics Forum (Proc. Eurographics 2023)},
  volume = {42},
  number = {2},
  doi = {https://doi.org/10.1111/cgf.14749},
  copyright = {Creative Commons Attribution 4.0 International}
}